Facilities

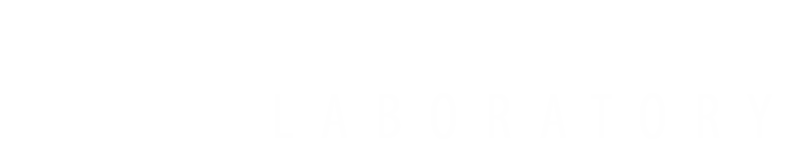

The IDS Scaled Smart City (IDS3C)

A fundamental component of research at IDS Lab involves studying safe and efficient decision-making for connected and automated vehicles (CAVs) in emerging mobility systems. To facilitate this work, IDS Lab has developed the Information and Decision Science Lab Scaled Smart City (IDS3C) (Fig. 2), which occupies a 400-square-foot area and includes 70 robotic cars at a 1:25 scale and 12 quadcopters. IDS3C can replicate real-world traffic scenarios in a small, controlled environment. This testbed helps us prove concepts beyond the simulation level and understand the implications of errors and delays in vehicle-to-vehicle and vehicle-to-infrastructure communication, as well as their impact on energy usage. IDS3C is equipped with a VICON motion capture system for vehicle localization. A central mainframe computer (with an Intel(R) Xeon(R) w9-3475X CPU) obtains information from the VICON system and computes the desired trajectories for the CAVs using the implemented algorithm. The mainframe computer then transmits the desired trajectories to the Raspberry Pi onboard each robotic car via the UDP/IP protocol.
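To illustrate the last step, here is a minimal sketch of how a single trajectory setpoint might be serialized and sent as a UDP datagram. The message layout (vehicle ID, timestamp, position, speed), the function names, and the loopback demo are assumptions made for illustration; the actual packet format used in IDS3C is not described here.

```python
import socket
import struct

# Hypothetical wire format (an assumption, not the lab's actual format):
# vehicle id (uint16), time [s], x [m], y [m], speed [m/s], network byte order.
SETPOINT_FORMAT = "!Hdddd"
SETPOINT_SIZE = struct.calcsize(SETPOINT_FORMAT)


def pack_setpoint(vehicle_id, t, x, y, speed):
    """Serialize one trajectory setpoint into a fixed-size datagram payload."""
    return struct.pack(SETPOINT_FORMAT, vehicle_id, t, x, y, speed)


def unpack_setpoint(payload):
    """Inverse of pack_setpoint; returns (vehicle_id, t, x, y, speed)."""
    return struct.unpack(SETPOINT_FORMAT, payload)


def send_setpoint(sock, address, vehicle_id, t, x, y, speed):
    """Send one setpoint to a car. UDP gives no delivery or ordering guarantee."""
    sock.sendto(pack_setpoint(vehicle_id, t, x, y, speed), address)


def demo_loopback():
    """Send one setpoint to a receiver on localhost and return what it decoded."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    receiver.settimeout(2.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        send_setpoint(sender, receiver.getsockname(), 7, 1.5, 0.25, -0.5, 0.3)
        payload, _ = receiver.recvfrom(SETPOINT_SIZE)
        return unpack_setpoint(payload)
    finally:
        sender.close()
        receiver.close()
```

Because UDP does not retransmit lost datagrams, a sketch like this would typically carry a timestamp in every message so that the receiver can discard stale setpoints; that is one reason the hypothetical format above includes the time field.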

The primary goal of the IDS3C is to implement and validate real-time control frameworks for coordinating CAVs in different traffic scenarios, such as crossing unsignalized intersections, merging on roadways and at roundabouts, or platooning on highways. By validating the control frameworks in an experimental testbed, we can evaluate the implications of CAV deployment in terms of safety guarantees, throughput maximization, and energy savings before transitioning to full-size vehicle validation. The 12 quadcopters in IDS3C, known as Crazyflies, are used to study the coordination between ground and aerial vehicles, such as in last-mile delivery and formation control tasks.

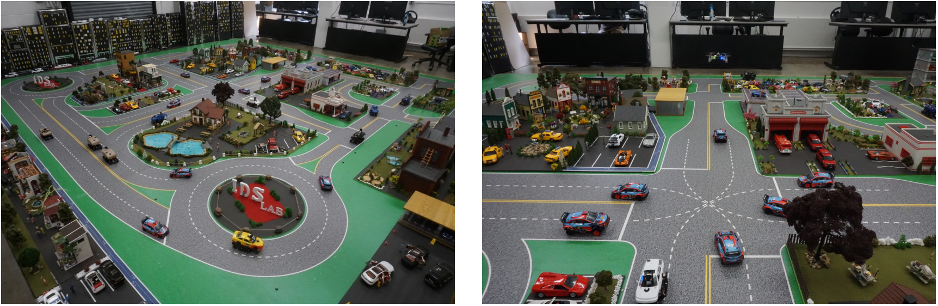

Since CAVs are expected to penetrate the market gradually, achieving 100% CAV deployment in transportation systems is a distant goal, so it is imperative to consider their operation in mixed traffic where human-driven vehicles (HDVs) co-exist with CAVs. To accommodate studies that involve interactions between HDVs and CAVs, IDS3C has 6 driving emulators that are connected to computers, allowing human participants to control the robotic cars manually, as shown in Fig. 3. Human participants observe the environment through a camera mounted on the robotic cars and make decisions by adjusting the speed and steering angle. These signals are received by the computers and transmitted in real time to the cars. This integration of driving emulators allows us to study several problems related to mixed traffic, such as learning human driving behavior online, understanding the interaction between HDVs and CAVs, and addressing the planning and control problems for CAVs in mixed traffic. Moreover, the testbed and driving emulators can be used to validate some features of advanced driver-assistance systems (ADAS), such as automatic lane keeping or adaptive cruise control, which facilitate and improve the driving experience for the human participants in the IDS3C.

Robotic CAVs for the Scaled Smart City

The 1:25 scale robotic CAVs deployed in IDS3C to emulate real vehicles on the road have been designed and built by students at the IDS Lab. These miniature cars, shown in Fig. 4, provide a cost-effective and flexible platform to test driving algorithms under different circumstances. The latest version of IDS3C’s robotic cars uses a commercial chassis equipped with a differential gear and four-wheel drive, providing realistic maneuvers. Each car has a control system comprising two components: a Raspberry Pi for high-level decision-making and wireless communication, and an Arduino board for low-level control functions. The Arduino is responsible for precisely controlling the car’s actuators, including a servo motor for lateral steering and a brushless DC (BLDC) motor for longitudinal movement. This dual-processor approach allows for efficient task distribution, with the Raspberry Pi handling complex computations and the Arduino managing real-time motor control.
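As a rough illustration of this task split, the sketch below shows how the high-level side might hand a steering and throttle command to the low-level controller as a small checksummed frame. The frame layout, the header byte, the steering limit of 30 degrees, and the function names are all hypothetical; the actual interface between the Raspberry Pi and the Arduino is not specified here.

```python
import struct

FRAME_HEADER = 0xAA        # hypothetical start-of-frame marker
MAX_STEERING_DEG = 30.0    # hypothetical mechanical steering limit


def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def encode_command(steering_deg, throttle):
    """Pack steering [deg] and normalized throttle [-1, 1] into a 6-byte frame.

    Layout (assumed): header, int16 steering in centidegrees,
    int16 throttle in per-mille (both little-endian), 8-bit additive checksum.
    """
    steering = int(round(clamp(steering_deg, -MAX_STEERING_DEG, MAX_STEERING_DEG) * 100))
    power = int(round(clamp(throttle, -1.0, 1.0) * 1000))
    body = struct.pack("<hh", steering, power)
    checksum = (FRAME_HEADER + sum(body)) & 0xFF
    return bytes([FRAME_HEADER]) + body + bytes([checksum])


def decode_command(frame):
    """Validate a frame and return (steering_deg, throttle); raise on corruption."""
    if len(frame) != 6 or frame[0] != FRAME_HEADER:
        raise ValueError("malformed frame")
    if (sum(frame[:-1]) & 0xFF) != frame[-1]:
        raise ValueError("checksum mismatch")
    steering, power = struct.unpack("<hh", frame[1:5])
    return steering / 100.0, power / 1000.0
```

Clamping on the sender side and checking the checksum on the receiver side keeps an out-of-range or corrupted command from reaching the actuators, which matters when the low-level board runs a tight real-time loop.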

Virtual Reality Platform with Large Language Model Integration

A rapidly growing area of research at the IDS Lab is studying the influence of human participants in intelligent transportation systems. This involves improving the safety, utility, and efficiency of CAVs in the presence of HDVs and when serving the diverse needs of human passengers. To capture both the behavior and the experience of humans in intelligent transportation systems, IDS Lab has developed a virtual reality (VR) platform, shown in Fig. 5. This VR platform can be used to capture human driving data in the presence of CAVs in realistic traffic scenarios, facilitating improved modeling of HDVs and the safe control of CAVs. Furthermore, by deploying a CAV’s control algorithm in the VR platform, researchers can capture the experience of a human passenger in a CAV, allowing them to safely improve algorithms in a human-centric approach.

Microsimulation Platforms

The research at IDS Lab spans multiple scales of transportation systems, from the study of transportation networks to the interactions between individual vehicles across a broad range of traffic management applications. For the evaluation of various routing, planning, and control strategies developed at the IDS Lab for intelligent transportation systems, researchers utilize numerous microsimulation platforms, such as Simulation of Urban MObility (SUMO), PTV Vissim, and MATLAB.

Simulation of Urban MObility (SUMO) is an open-source microscopic traffic simulation platform designed for detailed modeling and analysis of urban traffic scenarios. It allows for the simulation of large, complex road networks covering various transportation aspects, such as vehicle movement, signal control, and public transport. Through its graphical user interface (sumo-gui), SUMO enables users to visualize traffic flows and vehicle movements and to modify simulation parameters in real time. Another significant feature of SUMO is its ease of customization and integration with other software tools via its application programming interface (API). Finally, its Traffic Control Interface (TraCI) allows users to dynamically control the behavior of vehicles or traffic lights by integrating their own frameworks, and to gather data while the simulation is running. The IDS Lab has also used PTV Vissim software in similar applications. PTV Vissim, while not open source, offers 24-hour customer support, incorporates cutting-edge transportation models, and provides improved visualization of the results.
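The kind of control loop that TraCI makes possible can be sketched as follows. This is a minimal example, not the lab's framework: the speed-capping rule, the function names, and the configuration path passed to `run_with_sumo` are assumptions for illustration. The loop takes the `traci` module as an argument so that it can also be exercised with a stand-in object when SUMO is not installed.

```python
def cap_speeds(conn, max_speed, steps):
    """Advance the simulation `steps` times, capping every vehicle at max_speed [m/s].

    `conn` is the `traci` module (or any object exposing the same calls).
    Returns the mean vehicle speed observed at each step.
    """
    mean_speeds = []
    for _ in range(steps):
        conn.simulationStep()                      # advance one simulation step
        vehicle_ids = conn.vehicle.getIDList()     # vehicles currently in the network
        speeds = [conn.vehicle.getSpeed(v) for v in vehicle_ids]
        for vehicle_id, speed in zip(vehicle_ids, speeds):
            if speed > max_speed:
                # Override the car-following model with a fixed target speed.
                conn.vehicle.setSpeed(vehicle_id, max_speed)
        mean_speeds.append(sum(speeds) / len(speeds) if speeds else 0.0)
    return mean_speeds


def run_with_sumo(config_path, max_speed=10.0, steps=100):
    """Launch SUMO through TraCI; requires SUMO and its Python `traci` package."""
    import traci  # imported lazily so this file loads without SUMO installed

    traci.start(["sumo", "-c", config_path])  # config_path: a user-supplied .sumocfg
    try:
        return cap_speeds(traci, max_speed, steps)
    finally:
        traci.close()
```

Replacing `"sumo"` with `"sumo-gui"` in the start command would run the same loop with the graphical interface, which can be convenient for watching the effect of a controller while it runs.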

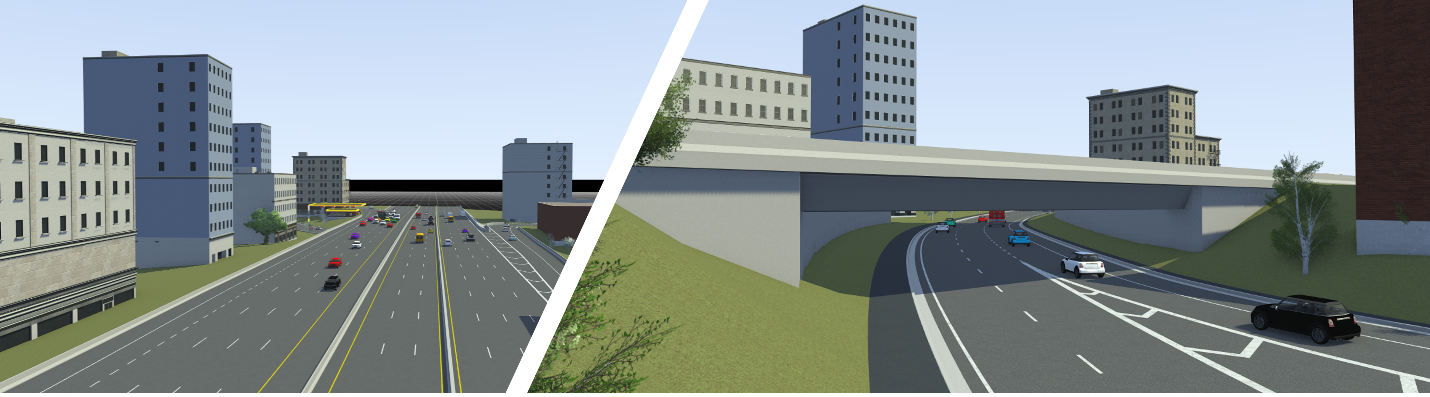

The IDS Lab also makes extensive use of MathWorks tools such as the Automated Driving Toolbox and RoadRunner to create realistic transportation scenarios in simulation. The Automated Driving Toolbox offers detailed scenario selection and integration with MATLAB and Simulink for dynamically controlling vehicles, while providing realistic visualizations through Unreal Engine. RoadRunner excels in scene creation, with assets for bridges, multi-level roads, and diverse road materials, among others (Fig. 7). RoadRunner also supports importing external files to create specific scenes aligned with real-world metrics, such as Times Square in New York City, enabling researchers at IDS Lab to implement their algorithms in realistic transportation networks. Furthermore, RoadRunner scenes can be exported to CARLA and integrated with IDS Lab’s VR platform and other control testing tools.

Finally, the IDS Lab uses MATLAB in connection with the other MathWorks products described above. To facilitate research at the cutting edge of decision-making in intelligent transportation, advanced MATLAB tools such as the Reinforcement Learning Toolbox can be used to train policies, and the results can be integrated into RoadRunner and the Automated Driving Toolbox.

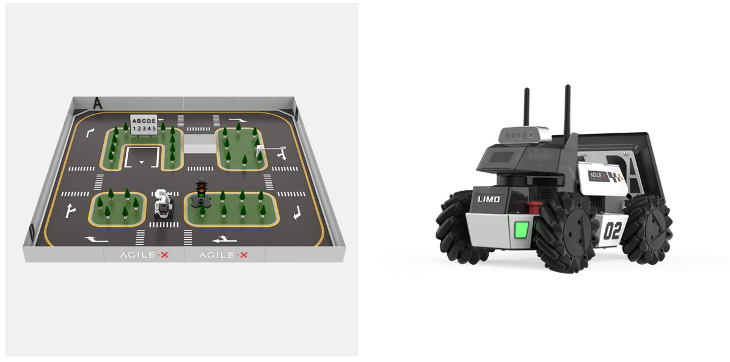

LIMO PRO Robotic Cars

A growing component of the research at the IDS Lab is deploying algorithms in realistic settings, accounting for environmental uncertainties, imperfect perception, and computational challenges. To facilitate this research, the IDS Lab owns 6 LIMO PRO robotic cars and an associated simulation table produced by AgileX Robotics, shown in Fig. 8. Each LIMO PRO integrates the NVIDIA Orin Nano for onboard computing, enabling stable multi-sensor data fusion, SLAM mapping, and the efficient deployment of deep-learning models. These capabilities are used alongside the EAI T-mini Pro LiDAR and Orbbec Dabai depth camera to perceive a variety of complex environments. The hardware of these robotic cars supports robust implementation of algorithms across applications requiring autonomous localization, navigation planning, multi-agent coordination, visual recognition, and dynamic obstacle avoidance. Each LIMO PRO supports both ROS and Gazebo and can be programmed in mainstream languages such as Python and C++ to implement and execute algorithms. The ability to customize their behavior and the open-source nature of the code allow the lab to use them in various intelligent transportation applications. The long battery life of these cars also enables their deployment in experiments on motion planning, navigation in crowded environments, and routing for last-mile delivery.